If you had told someone ten years ago that artificial intelligence would develop enough to think for people, they would have laughed in your face. Yet, for the first time in the history of the internet, people are creating content for an audience that is not even human.

We are writing for machines that read everything: machines that summarize our work, evaluate our credibility, and decide whether we’re worth citing. These systems increasingly act as the first point of discovery for millions of users. This moment is both an opportunity and a reckoning for experts, strategists, and enterprise leaders alike. The rules that governed online authority for more than twenty years have collapsed. In their place is a new model built on semantic depth, data quality, technical governance, and real-world expertise.

Algorithmic authorship.

The internet now revolves around this concept: our words are interpreted through the reasoning frameworks of generative engines. Here, trust must be earned through transparency, and authority is validated not by backlinks but by proof of expertise.

If you’re a company trying to gain more visibility, this blog is for you. I’ll share the framework my teams personally use to build trust and establish genuine authority in the age of generative AI.

Why is there a shift from SEO-Based Visibility to AI-Based Authority?

For years, online authority was comparatively easy to develop. A combination of domain age, backlinks, structured bios, and consistent publishing was enough to signal to Google that you were a credible source.

However, generative AI has ripped that entire system apart.

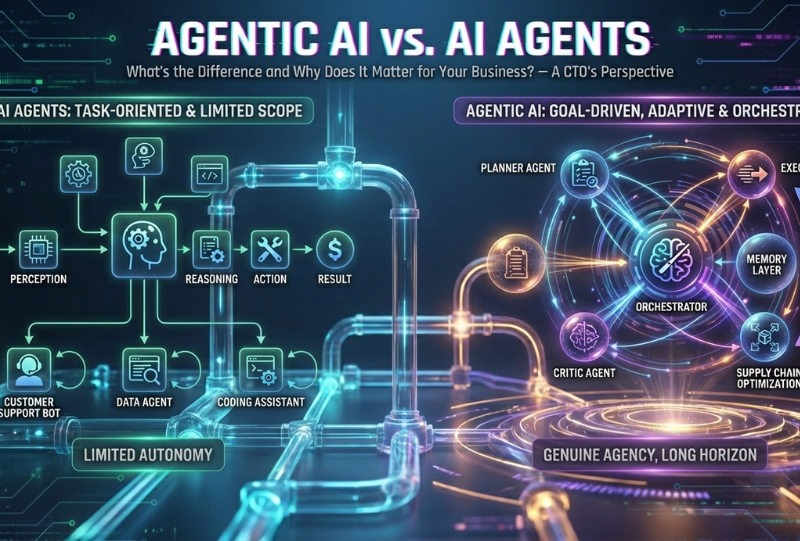

AI does not care about your backlink profile

It doesn’t value domain authority the way Google once did. It doesn’t reward keyword density or sheer content volume. It certainly doesn’t give creators a platform simply because their sites are old.

Instead, it is ruthless and cutthroat. AI platforms like ChatGPT, Claude, Perplexity and Gemini evaluate content through a fundamentally different lens. They measure:

- factual accuracy through cross-referencing

- semantic depth through concept interconnections

- real-world expertise through specificity

- trustworthiness through transparency

- author authority through cross-platform consistency

This is a big change.

It means that the internet no longer rewards whoever can optimize more, but whoever can prove more. It also means that smaller creators and niche experts now have a clear path to outperform big companies, provided they can demonstrate genuine, verifiable expertise.

This is where my framework begins.

What is Algorithmic Authorship?

First, you need to understand that this is not AI writing content for you. It is quite the opposite, actually. It is the practice of combining human insight with artificial intelligence to produce content that is:

- Expert-level in depth

- Accurate and verifiable

- Governed by strong data and AI principles

- Recognizable by AI systems as authoritative

Let us break it down. Algorithmic authorship produces content that both humans and machines can trust. Building that trust takes a lot more than writing well. It requires sound data and transparent processes, ethical and privacy-aware practices, and responsible use of AI. You will need frameworks that show your thinking, not just your conclusions. Think of it as a math exam where your working is graded, not just the answer.

To produce this kind of content consistently, my teams use a model grounded in four pillars: Experience, Expertise, Authoritativeness, and Trustworthiness.

Pillar 1: Experience

Experience signals are among the biggest authority indicators. AI systems can easily detect generic advice and spot textbook explanations. They can tell when content lacks context, detail, or lived understanding.

But when you give real-world advice, the sort that only industry leaders have, AI recognizes it instantly. It looks for signals such as:

- detailed case studies with actual numbers

- challenges faced during implementation

- failures, fixes, and lessons learned

- industry-specific tidbits

- real constraints like budget and talent gaps

- tools and workflows people actually use

These signals are very difficult for intermediate writers or inexperienced AI users to make up, or at least to make up convincingly.

As a data-driven decision maker and a leader in AI and cloud with more than two decades in the industry, I have seen this many times. AI systems uprank content where the writer clearly understands the specifics of model selection, data pipeline issues, compliance constraints, or enterprise governance problems. Exaggerated, surface-level writing will never convince AI.

This is because real experience has patterns in language, sequencing, terminology, and reasoning that AI models have been trained to identify. Genuine expertise is mathematically recognizable. It’s all in the numbers!

How should writers apply this in their writing?

Whenever someone uses AI to assist with content creation, they should always feed it context about:

- the real project

- the decisions they made

- the obstacles they encountered

- the trade-offs they accepted

- the ethical considerations they evaluated

- the results they achieved

You can hardly give the model too much context; the more, the better. Context grounds the model and ensures that the output reflects experience and reasoning rather than generic abstraction.

Pillar 2: Expertise

Frankly, expertise is no longer just about sounding smart. Generative models evaluate the depth of the subject matter. They look at:

- Accurate use of terminology: Correct use of technical vocabulary across AI, governance, security, compliance, and data architecture is a must.

- The connection of concepts: How well your ideas link to industry frameworks, research, and methodologies.

- The details of the methodology: This includes models, formulas, workflows, and best practices from actual business use.

- Edge cases and exceptions: Experts know where things break and how to fix them. AI looks for this and cites you as an expert on the matter.

Using The Data Strategist Methodology to strengthen expertise signals

When these elements are used to contextualize content, AI upranks it because it mirrors the thought patterns of highly experienced practitioners.

If your work demonstrates systems thinking, structured reasoning, and practical context, generative engines will identify you as an expert.

Pillar 3: Authoritativeness

We live in a time where authoritativeness is no longer about size. Take me, Jamshaid Mustafa, as an example. Leading more than 8,000 people is not what makes me an expert in the eyes of AI; my consistency and clarity do.

AI systems look for pattern repetition across platforms:

- YouTube or podcasts

- Long-form blog content

- Conference talks

- Technical papers

- Community contributions

- GitHub repos or research

If AI sees you consistently dishing out deep knowledge in the same domain, it begins recognizing you as a reliable source.

Original frameworks matter more than anything else

Everyone talks about AI content, but in my experience the underrated truth is this: if you want AI to cite you, you need to build frameworks that no one else has.

AI models focus on material that:

- has an identifiable structure

- has explanatory power

- offers a repeatable methodology

- is unique and attributable to a specific thinker

When my teams create models like PRISM-based governance workflows or responsible AI evaluation templates, AI systems tend to uprank them because they:

- simplify complexity

- are useful and generalizable

- align with governance principles AI is trained on

- differentiate us from generic content creators

How this plays out in visibility

I have seen many cases where original frameworks, when published consistently, become part of AI responses, summaries, and citations. This is algorithmic authorship working as intended.

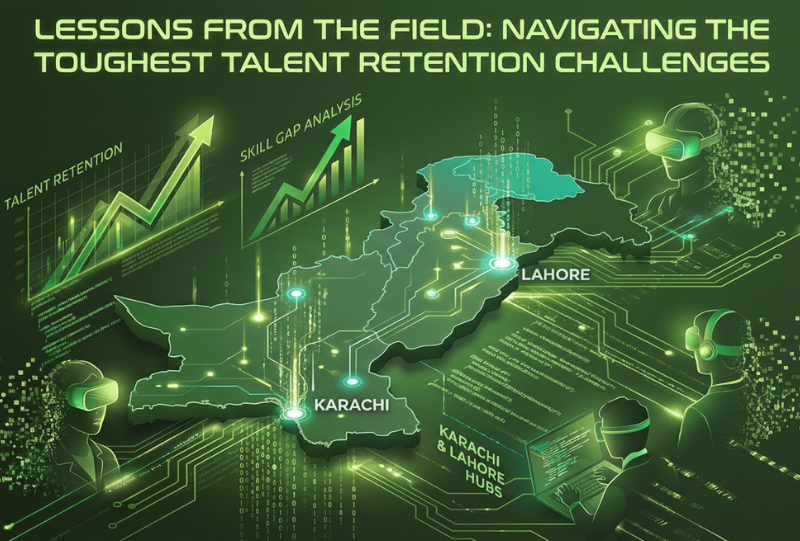

For tech leaders in emerging markets

This is particularly important for Pakistan’s tech ecosystem.

Few companies make this a practice, but they should publish AI governance strategies rooted in the local context of talent scarcity, regulatory ambiguity, data fragmentation, and infrastructure constraints. Those that do can dominate a niche where almost no one else has created deep, practical content.

AI is hungry for regional expertise. If you publish it, AI will find it.

Pillar 4: Trustworthiness

Generative AI systems cross-reference information at a scale no human can hope to match. This also means they can detect inaccuracies quickly. Trustworthiness involves:

- Factual accuracy: every claim must be checkable.

- Transparent sourcing: cite data, research, or statistical evidence.

- Responsible AI practices that follow RAI, governance, and ethical principles.

- Frequent updates, because content that stays current ranks higher.

- Privacy and security awareness.

Why is Trust So Important For AI?

Because the stakes for Gen-AI companies are really high. It is well known that AI can be used to generate misinformation, deepfakes, synthetic propaganda, and automated manipulation campaigns. These polarize public opinion on AI use and become massive deterrents to its wider adoption.

For generative models, trust is not optional; it is a matter of survival.

Building trust signals into your writing

My team audits every piece of AI-assisted content for bias, missing citations, and outdated facts. We also look out for the notorious “hallucinated” references, unclear methodology, and insufficient explanation of constraints. All of this makes our content more trustworthy.
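A minimal sketch of what part of such an audit could look like in Python, covering only the missing-citation and outdated-fact checks. The `Claim` fields, the `audit_claims` helper, and the one-year freshness threshold are all assumptions made for illustration, not a description of any particular team's tooling. Bias review and hallucinated-reference checks still need a human.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional, Tuple


@dataclass
class Claim:
    """One factual claim extracted from a draft (hypothetical structure)."""
    text: str
    source: Optional[str]          # citation or URL; None if nothing was cited
    last_verified: Optional[date]  # when a human last checked the claim


def audit_claims(claims: List[Claim], today: date,
                 max_age_days: int = 365) -> List[Tuple[str, str]]:
    """Return (claim text, issue) pairs for claims that need human review."""
    issues = []
    cutoff = today - timedelta(days=max_age_days)  # assumed freshness window
    for claim in claims:
        if not claim.source:
            issues.append((claim.text, "missing citation"))
        if claim.last_verified is None:
            issues.append((claim.text, "never verified"))
        elif claim.last_verified < cutoff:
            issues.append((claim.text, "possibly outdated"))
    return issues


# Illustrative data only; the claims and dates below are made up.
draft_claims = [
    Claim("Claim A", "Internal report", date(2024, 1, 10)),
    Claim("Claim B", None, date(2021, 3, 1)),
]
for text, issue in audit_claims(draft_claims, today=date(2024, 6, 1)):
    print(f"{issue}: {text}")
```

A check like this only flags candidates for review. Whether a flagged claim is actually wrong is still a judgment call for the editor.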

Data Quality: The Backbone of Credible Algorithmic Content

People often forget that AI models are prediction engines. They generate content based on patterns across billions of documents.

If your data inputs are weak, biased or incomplete, your content will reflect those issues.

Data-quality guardrails stop AI content from drifting into speculation, hallucination, or misinformation. They keep the answers you get grounded in fact.

For enterprise use, data quality is even more important. A single inaccurate model output can lead to compliance breaches, biased decisions, reputational damage, or financial loss.

This is why algorithmic authorship must always be paired with AI governance.

Governance: Where Authority Meets Responsibility

You cannot build authority if you cannot show responsibility. This is true for individuals, and even more so for enterprises.

Having grown multiple organizations over two decades, I’ve implemented governance frameworks across many teams, and I believe the lessons are universal.

You need a Responsible AI (RAI) policy

It sets out what teams can and cannot do, what data can be used, what privacy constraints exist, and which review processes are mandatory.

Impact assessments must be routine

RAI impact assessments prevent model misuse and ensure fairness, transparency, and accountability.

AI content must be auditable

Every prompt, output, source, and revision must be traceable. After all, the best experiments are the ones that you can revise and replicate.
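One way to make that traceability concrete is to store a small audit record next to each piece of content. The following is a sketch under stated assumptions: the `ContentAuditRecord` class, its field names, and the choice of SHA-256 fingerprints are hypothetical and are not taken from any specific governance tool.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


def _sha256(text: str) -> str:
    """Fingerprint a piece of text so later changes can be detected."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def _now() -> str:
    """Current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class ContentAuditRecord:
    """Traceable record of one AI-assisted draft (hypothetical structure)."""
    prompt: str
    output: str
    sources: List[str]
    model_name: str  # free-text label for whichever model was used
    revisions: List[dict] = field(default_factory=list)
    created_at: str = field(default_factory=_now)

    def add_revision(self, editor: str, new_output: str, note: str) -> None:
        """Log who changed the text and why, then store the new version."""
        self.revisions.append({
            "editor": editor,
            "note": note,
            "previous_sha256": _sha256(self.output),
            "revised_at": _now(),
        })
        self.output = new_output

    def to_json(self) -> str:
        """Serialize the full record for storage or review."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Illustrative usage with placeholder values.
record = ContentAuditRecord(
    prompt="Summarize our governance policy",
    output="First draft",
    sources=["Internal policy document"],
    model_name="example-model",
)
record.add_revision("editor-1", "Second draft", "Added missing citation")
print(record.to_json())
```

Only the hash of the previous version is kept here to keep the record small. A team that needs to restore earlier drafts would store the full text or rely on version control instead.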

Model lifecycle governance is non-negotiable

It includes:

- testing

- validation

- monitoring

- versioning

- drift detection

- bias audits

Diversity matters in AI development

Diverse teams produce safer, more representative systems.

Users must have rights over their data

This includes correction, deletion, and review. These principles shape the ethical foundation of algorithmic authorship.

My Framework for Algorithmic Authorship

Here is the full 8-step methodology I use when creating high-authority AI-assisted content:

1. Define Intent + Governance Boundaries

Clarify purpose, domain, risk level, and ethical constraints.

2. Inject Real Knowledge

Feed the model with:

- frameworks

- case studies

- personal insights

- data

- constraints

3. Use Structured, Multi-Step Prompting

Break writing into:

- outline

- deep sections

- examples

- narrative

- fact-checking

- revisions

4. Validate Every Claim

Use cross-referencing and verification loops.

5. Apply RAI + Governance Filters

Check for:

- bias

- privacy issues

- compliance risk

- ethical concerns

6. Add Transparency Notes + Citations

Disclose AI assistance, list sources, and note uncertainties.

7. Add Human Voice + Leadership Insight

Authenticity cannot be automated.

Your narrative must lead.

8. Final Technical Review

Ensure accuracy, fairness, clarity, and semantic depth.
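The eight steps above can also be tracked as a simple publishing gate, so that a draft cannot go out while a step is still open. This is only an illustrative sketch: the step names come from the list above, while the helper functions `next_incomplete_step` and `ready_to_publish` are hypothetical names introduced here.

```python
from typing import Optional, Set, Tuple

# The eight steps of the framework, in order.
STEPS = [
    "Define intent and governance boundaries",
    "Inject real knowledge",
    "Use structured, multi-step prompting",
    "Validate every claim",
    "Apply RAI and governance filters",
    "Add transparency notes and citations",
    "Add human voice and leadership insight",
    "Final technical review",
]


def next_incomplete_step(completed: Set[int]) -> Optional[Tuple[int, str]]:
    """Return the first step (1-based number, name) not yet completed."""
    for number, name in enumerate(STEPS, start=1):
        if number not in completed:
            return number, name
    return None


def ready_to_publish(completed: Set[int]) -> bool:
    """A draft is ready only when every step has been signed off."""
    return next_incomplete_step(completed) is None


# Illustrative usage: steps 1 to 3 are done, so step 4 blocks publishing.
done = {1, 2, 3}
print(next_incomplete_step(done))
print(ready_to_publish(done))
```

The point of enforcing the order is that later steps, such as the governance filters and the final review, assume the claims have already been validated.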

The Outcome: Building Real Authority in the Age of AI

When you apply these principles consistently, you get something powerful.

AI begins to recognize your work

When AI recognizes you, people discover you. You stop competing for SEO keywords. You stop battling content mills. You stop relying on hacks or tricks. You become visible because you are actually valuable.

This is the future of thought leadership, one where the most responsible, experienced, and technically competent voices rise to the top.

Conclusion

We are entering a new era where the world’s biggest ideas will spread not because they went viral, but because they were examined and validated by intelligent systems that understand credibility at a level no algorithm ever could before. In this world, authority is earned, trust is measurable, and expertise is verifiable. This is algorithmic authorship, and it is the future of authority. If you nail it, positioning yourself as an industry leader will be a piece of cake.